Design Patterns: The Observer Pattern


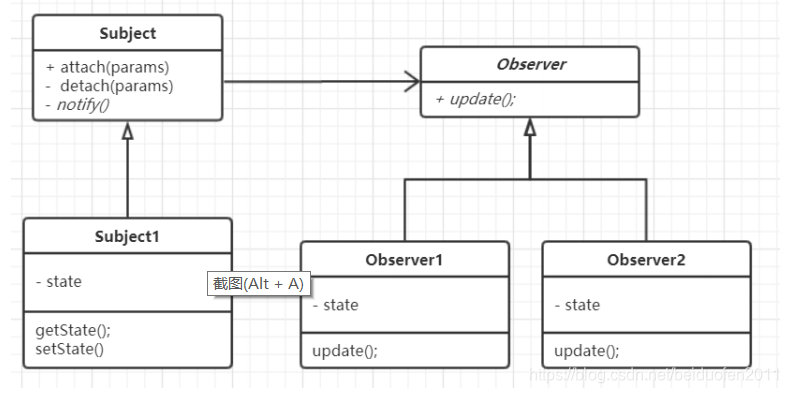

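Definition:

The Observer pattern defines a one-to-many dependency between objects. Several observer objects listen to a single subject object at the same time, and when the subject's state changes, all of its dependents are notified and updated automatically.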
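The one-to-many relationship can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical generic `SimpleSubject<T>` class whose observers are plain `java.util.function.Consumer<T>` callbacks (neither name comes from the original article). A single state change reaches every registered observer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

public class ObserverDemo {
    public static void main(String[] args) {
        SimpleSubject<String> weather = new SimpleSubject<>();
        List<String> log = new ArrayList<>();

        // Two independent observers listen to the same subject.
        weather.attach(state -> log.add("A got " + state));
        weather.attach(state -> log.add("B got " + state));

        weather.setState("sunny");
        System.out.println(log); // [A got sunny, B got sunny]
    }
}

// Hypothetical minimal subject: holds a list of observers and pushes
// every new state to all of them (the one-to-many dependency).
class SimpleSubject<T> {
    private final List<Consumer<T>> observers = new ArrayList<>();

    void attach(Consumer<T> observer) {
        observers.add(observer);
    }

    void detach(Consumer<T> observer) {
        observers.remove(observer);
    }

    // A state change notifies every registered observer in registration order.
    void setState(T state) {
        for (Consumer<T> observer : observers) {
            observer.accept(state);
        }
    }
}
```

The subject only knows that its observers accept a `T`; it never depends on their concrete classes.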

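When to use:

Use the Observer pattern when changing the state of one object may require changing other objects, and the actual set of those objects is not known in advance or changes dynamically.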
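When the set of observers is not known in advance, it helps if subscribing returns a handle that removes the observer later. The sketch below assumes hypothetical `Topic<T>` and `Subscription` types (not from the article). It stores observers in a `CopyOnWriteArrayList`, so an observer can unsubscribe even while a notification is in progress.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

public class DynamicObserversDemo {
    public static void main(String[] args) {
        Topic<Integer> topic = new Topic<>();
        Subscription first = topic.subscribe(v -> System.out.println("first: " + v));
        topic.subscribe(v -> System.out.println("second: " + v));

        topic.publish(1); // both observers are notified
        first.close();    // the first observer leaves at run time
        topic.publish(2); // only the second observer is notified
    }
}

// Hypothetical handle returned by subscribe(); close() removes the observer.
// It narrows AutoCloseable.close() so that no checked exception is declared.
interface Subscription extends AutoCloseable {
    @Override
    void close();
}

// Hypothetical subject whose observer set can grow and shrink at run time.
class Topic<T> {
    // Iteration works on a snapshot, so observers may unsubscribe during publish().
    private final List<Consumer<T>> observers = new CopyOnWriteArrayList<>();

    Subscription subscribe(Consumer<T> observer) {
        observers.add(observer);
        return () -> observers.remove(observer);
    }

    void publish(T value) {
        for (Consumer<T> observer : observers) {
            observer.accept(value);
        }
    }
}
```

Because `Subscription` extends `AutoCloseable`, a subscription can also be scoped with try-with-resources.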

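Advantages:

1. It follows the Open/Closed Principle: new observer types can be added without modifying the subject's code.

2. Relationships between objects can be established at run time.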

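Uses in the JDK and Spring source code:

1. JDK: `java.util.Observable` (used together with the `java.util.Observer` interface; both are deprecated since Java 9)

2. Spring: `org.springframework.context.ApplicationListener`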
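A minimal sketch of the JDK classes listed above. `WeatherStation` is a hypothetical subclass used only for illustration. The detail worth noticing is that `Observable.notifyObservers` does nothing unless `setChanged()` has been called first, and it clears the changed flag after notifying. Because these classes are deprecated since Java 9, the sketch suppresses the deprecation warnings.

```java
import java.util.Observable;
import java.util.Observer;

public class JdkObservableDemo {
    @SuppressWarnings("deprecation")
    public static void main(String[] args) {
        WeatherStation station = new WeatherStation();
        // java.util.Observer has a single method update(Observable o, Object arg),
        // so a lambda can implement it.
        Observer display = (source, arg) -> System.out.println("received: " + arg);
        station.addObserver(display);
        station.setTemperature(25.5f); // prints "received: 25.5"
    }
}

// Hypothetical subject built on the JDK class.
@SuppressWarnings("deprecation")
class WeatherStation extends Observable {
    void setTemperature(float temperature) {
        setChanged();                 // mark the state as changed; otherwise nothing is sent
        notifyObservers(temperature); // pushes the value (boxed to Float) as 'arg', then clears the flag
    }
}
```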

Example: a weather station (`WeatherData`) pushes temperature, pressure and humidity to every registered display.

```java
package com.fen.dou.sjms.observer;

// Subject interface: the object being observed
public interface Subject {
    public void registerObserver(Observer o);
    public void removeObserver(Observer o);
    public void notifyObservers();
}
```

```java
package com.fen.dou.sjms.observer;

import java.util.ArrayList;

/**
 * The core class:
 * 1. Holds the latest weather data.
 * 2. Holds the collection of observers, managed with an ArrayList.
 * 3. When the data is updated, it walks the list and notifies every
 *    subscriber, so that all of them see the latest information.
 * @author Administrator
 */
public class WeatherData implements Subject {
    private float temperature;
    private float pressure;
    private float humidity;
    // Observer collection; new third-party subscribers are added here
    private ArrayList<Observer> observers;

    public WeatherData() {
        observers = new ArrayList<>();
    }

    public float getTemperature() {
        return temperature;
    }

    public float getPressure() {
        return pressure;
    }

    public float getHumidity() {
        return humidity;
    }

    public void dataChange() {
        // Call update() on every subscriber
        notifyObservers();
    }

    // Called whenever new data arrives
    public void setData(float temperature, float pressure, float humidity) {
        this.temperature = temperature;
        this.pressure = pressure;
        this.humidity = humidity;
        // Push the latest data to the subscribers (e.g. currentConditions)
        dataChange();
    }

    // Register an observer
    @Override
    public void registerObserver(Observer o) {
        observers.add(o);
    }

    // Remove an observer
    @Override
    public void removeObserver(Observer o) {
        if (observers.contains(o)) {
            observers.remove(o);
        }
    }

    // Iterate over all observers and notify each one
    @Override
    public void notifyObservers() {
        for (Observer observer : observers) {
            observer.update(this.temperature, this.pressure, this.humidity);
        }
    }
}
```

```java
package com.fen.dou.sjms.observer;

// Observer interface, implemented by each observer
public interface Observer {
    public void update(float temperature, float pressure, float humidity);
}
```

```java
package com.fen.dou.sjms.observer;

public class BaiduSite implements Observer {
    // Temperature, pressure, humidity
    private float temperature;
    private float pressure;
    private float humidity;

    // Update the weather readings; called by WeatherData (push model)
    @Override
    public void update(float temperature, float pressure, float humidity) {
        this.temperature = temperature;
        this.pressure = pressure;
        this.humidity = humidity;
        display();
    }

    // Display the readings
    public void display() {
        System.out.println("=== Baidu Site ===");
        System.out.println("***Baidu Site temperature: " + temperature + "***");
        System.out.println("***Baidu Site pressure: " + pressure + "***");
        System.out.println("***Baidu Site humidity: " + humidity + "***");
    }
}
```

```java
package com.fen.dou.sjms.observer;

public class CurrentConditions implements Observer {
    // Temperature, pressure, humidity
    private float temperature;
    private float pressure;
    private float humidity;

    // Update the weather readings; called by WeatherData (push model)
    @Override
    public void update(float temperature, float pressure, float humidity) {
        this.temperature = temperature;
        this.pressure = pressure;
        this.humidity = humidity;
        display();
    }

    // Display the readings
    public void display() {
        System.out.println("***Today mTemperature: " + temperature + "***");
        System.out.println("***Today mPressure: " + pressure + "***");
        System.out.println("***Today mHumidity: " + humidity + "***");
    }
}
```

```java
package com.fen.dou.sjms.observer;

public class Client {
    public static void main(String[] args) {
        // Create the subject
        WeatherData weatherData = new WeatherData();
        // Create the observers
        CurrentConditions currentConditions = new CurrentConditions();
        BaiduSite baiduSite = new BaiduSite();
        // Register them with weatherData
        weatherData.registerObserver(currentConditions);
        weatherData.registerObserver(baiduSite);

        // Test
        System.out.println("Notifying all registered observers:");
        weatherData.setData(10f, 100f, 30.3f);

        weatherData.removeObserver(currentConditions);
        // Test again: only baiduSite is notified now
        System.out.println();
        System.out.println("Notifying all registered observers:");
        weatherData.setData(10f, 100f, 30.3f);
    }
}
```

Usage in Spring via `org.springframework.context.ApplicationListener`:

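```java
import org.springframework.context.ApplicationContext;
import org.springframework.context.event.ApplicationContextEvent;

// Custom event; ApplicationContextEvent is abstract, so it is subclassed here
public class MyEvent extends ApplicationContextEvent {
    public MyEvent(ApplicationContext source) {
        super(source);
    }

    public void out(String name) {
        System.out.println("MyEvent executed, name: " + name);
    }
}
```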

```java
import org.springframework.context.ApplicationListener;

// The type argument tells Spring that this listener only handles MyEvent
public class MyListenerA implements ApplicationListener<MyEvent> {
    @Override
    public void onApplicationEvent(MyEvent event) {
        System.out.println("MyListenerA executed");
        event.out("wangmingyuan not love");
    }
}
```

```java
import org.springframework.context.ApplicationListener;

// The type argument tells Spring that this listener only handles MyEvent
public class MyListenerB implements ApplicationListener<MyEvent> {
    @Override
    public void onApplicationEvent(MyEvent event) {
        System.out.println("MyListenerB executed");
        event.out("wangmingyuan not love");
    }
}
```

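```java
import org.springframework.beans.BeansException;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;
import org.springframework.context.ApplicationEvent;

// Publisher: Spring injects the ApplicationContext through ApplicationContextAware
public class MyPublisher implements ApplicationContextAware {
    private ApplicationContext applicationContext;

    @Override
    public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
        this.applicationContext = applicationContext;
    }

    public void publisherEvent(ApplicationEvent event) {
        System.out.println("----- publishing event ----- " + event);
        applicationContext.publishEvent(event);
    }
}
```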

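```java
import org.springframework.context.annotation.AnnotationConfigApplicationContext;
import org.springframework.context.annotation.Import;

@Import(value = {MyEvent.class, MyListenerA.class, MyListenerB.class, MyPublisher.class})
public class EventTest {
    public static void main(String[] args) {
        AnnotationConfigApplicationContext context = new AnnotationConfigApplicationContext(EventTest.class);
        MyPublisher myPublisher = context.getBean(MyPublisher.class);
        MyEvent myEvent = context.getBean(MyEvent.class);
        // Both MyListenerA and MyListenerB receive the event
        myPublisher.publisherEvent(myEvent);
        context.close();
    }
}
```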
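In Spring, `publishEvent` hands the event to the context's event multicaster (by default `SimpleApplicationEventMulticaster`), which invokes every listener whose declared event type, such as `MyEvent` in `ApplicationListener<MyEvent>`, matches the published event. By default this happens synchronously on the publishing thread. The plain-Java sketch below imitates only that type-based dispatch, so it runs without Spring. `TypedEventBus`, `OrderPlaced` and `UserRegistered` are hypothetical names, and this is not Spring's actual implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

public class MulticasterDemo {
    public static void main(String[] args) {
        TypedEventBus bus = new TypedEventBus();
        bus.addListener(OrderPlaced.class, e -> System.out.println("listener A: " + e.id));
        bus.addListener(OrderPlaced.class, e -> System.out.println("listener B: " + e.id));
        bus.addListener(UserRegistered.class, e -> System.out.println("user listener: " + e.name));

        bus.publish(new OrderPlaced(42)); // only the two OrderPlaced listeners run
    }
}

// Hypothetical event types.
class OrderPlaced {
    final int id;
    OrderPlaced(int id) { this.id = id; }
}

class UserRegistered {
    final String name;
    UserRegistered(String name) { this.name = name; }
}

// Hypothetical, heavily simplified stand-in for type-based event dispatch:
// each listener declares the event type it handles, and publish() invokes
// only the listeners whose type matches the event, in registration order.
class TypedEventBus {
    private static final class Registration<E> {
        private final Class<E> type;
        private final Consumer<? super E> listener;

        Registration(Class<E> type, Consumer<? super E> listener) {
            this.type = type;
            this.listener = listener;
        }

        void deliverIfMatches(Object event) {
            if (type.isInstance(event)) {
                listener.accept(type.cast(event));
            }
        }
    }

    private final List<Registration<?>> registrations = new ArrayList<>();

    <E> void addListener(Class<E> type, Consumer<? super E> listener) {
        registrations.add(new Registration<>(type, listener));
    }

    void publish(Object event) {
        for (Registration<?> registration : registrations) {
            registration.deliverIfMatches(event);
        }
    }
}
```

Like the weather example, publishing stays decoupled from the listeners: the publisher only knows the bus, and each listener only knows the event type it cares about.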

Reposted from: http://bwwrz.baihongyu.com/
